Precision and Accuracy

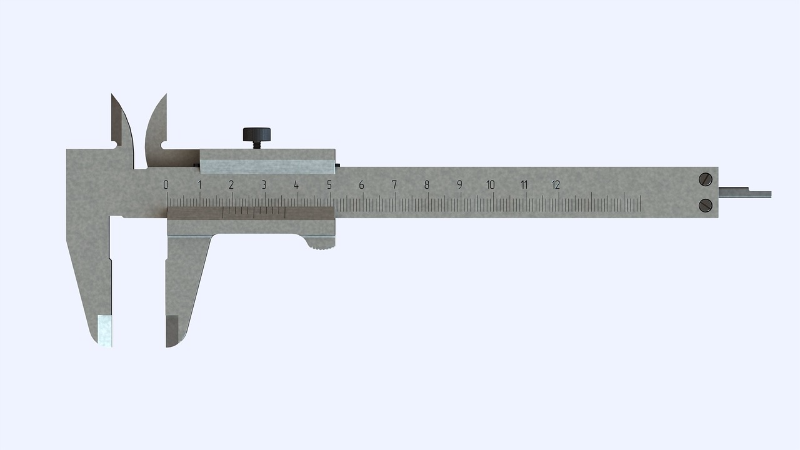

The two terms precision and accuracy are often thought to be synonyms, but have rather different meanings in experimental science. A precise instrument is one that gives the same reading repeatably when measuring the same quantity. If you have a digital scale at home for measuring your weight, try standing on it a few times. If it gives the same reading again and again, it is precise. Precision also depends on the number of decimal places provided by the scale or instrument. If the scale only reads to the nearest whole pound (or kg), as compared to the nearest tenth, the one that measures tenths is more precise. In this sense, a caliper is more precise than a ruler since more decimals of precision can be obtained with a caliper.

The precision of a value is related to the number of significant digits it contains. If you don't know about significant digits, I have embedded the Wikipedia site below. Keeping track of significant digits is a nice way of doing bookkeeping such that appropriate precision is recorded in numbers and used in subsequent calculations. Such bookkeeping is not perfect, but is a common practice in sciences and engineering. This first example will be meaningful whether you know about significant digits or not:

Let's suppose a baby is said to weigh 4 pounds (our son was born even smaller than this as a preemie). When we hear this weight, we are to assume that it has been rounded to the nearest whole pound. When we round to the nearest pound, we have a maximum error of half a pound. It's called the maximum error because if the error were greater than half a pound, the value would have rounded to the next larger or smaller integer pound. The maximum error is also referred to as the absolute error of the measurement. This means the child's weight should be written as $4.0 \pm 0.5$ lbs. It is common practice when recording values to match the precision of the measurement and the associated error. In this case they both have tenths place precision, so we add the decimal point and the zero to the 4 lbs. This is only justified if we write the error with it. Adding the zero without an error is borderline criminal, because it claims you have a measurement 10 times more precise than the one you in fact had.

Realize that as a percentage, the error is 0.5/4=0.125=12.5%! This is the relative error of the measurement. It is the relative error that we expect a precision instrument to minimize if it's the right tool for the job. When thinking of relative error, we can see the trouble with only recording single digits. Furthermore, the smaller the digit, the worse the relative error becomes. A two pound child's weight would have an associated 25% error! This error is easily reduced by using a scale capable of measuring another decimal place or more.
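The arithmetic above can be sketched in a few lines of Python. The function names `max_rounding_error` and `relative_error` are made up for this illustration, and the last case assumes a hypothetical scale that reads to a tenth of a pound.

```python
def max_rounding_error(resolution):
    """Maximum (absolute) error when a value is rounded to the nearest `resolution`."""
    return resolution / 2


def relative_error(value, absolute_error):
    """Absolute error expressed as a fraction of the measured value."""
    return absolute_error / abs(value)


# Scale that reads to the nearest whole pound
for weight_lb in (4, 2):
    e = max_rounding_error(1.0)
    print(f"{weight_lb} lb: +/- {e} lb, relative error {relative_error(weight_lb, e):.1%}")

# Hypothetical scale that reads to the nearest tenth of a pound
e_tenths = max_rounding_error(0.1)
print(f"4.0 lb: +/- {e_tenths} lb, relative error {relative_error(4.0, e_tenths):.2%}")
```

One extra decimal place on the scale cuts the relative error of the 4 lb reading by a factor of ten.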

Significant Digits (or Figures)

Precision versus Accuracy

So what is accuracy? Accuracy is all about whether the value is actually correct. You could have a miscalibrated scale in a chemistry lab that is capable of reading mass down to a thousandth of a gram that consistently gives the same, incorrect mass of a sample to a high level of precision, but the reading isn't accurate.

Throwing darts is an example often used to contrast precision and accuracy. A precise (but not accurate) dart thrower will make tight clusters that are not necessarily anywhere near the bulls-eye. They might in fact throw a tight cluster of darts that get stuck in the drywall next to the board.

An accurate (but not precise) thrower will make a wide cluster that centers on the bulls-eye. The better instrument and the better dart-thrower is the more precise one with tight clusters. It is expected that with a little adjustment (calibration) the precise instrument (and dart thrower) will become both precise and accurate.

In this sense, problems with accuracy are easy to fix by calibration. Problems with precision are much more difficult to deal with. Thus, the price of an instrument rises with its precision.
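One way to put numbers on the dart-board picture is to treat accuracy as the offset of the average reading from the true value (the bias) and precision as the scatter of the readings (here, the sample standard deviation). The sketch below uses invented readings of a hypothetical 10.000 g reference mass. Both the data and the choice of standard deviation as the measure of scatter are assumptions made for illustration.

```python
import statistics


def bias_and_spread(readings, true_value):
    """Return (bias, spread): mean offset from the true value, and sample standard deviation."""
    bias = statistics.mean(readings) - true_value
    spread = statistics.stdev(readings)
    return bias, spread


def calibrate(readings, bias):
    """Subtract a known, constant offset from every reading."""
    return [r - bias for r in readings]


true_mass = 10.000  # grams, hypothetical reference mass

# Invented data: tight cluster far from the true value
precise_not_accurate = [10.251, 10.249, 10.250, 10.252, 10.248]
# Invented data: wide cluster centered on the true value
accurate_not_precise = [9.6, 10.5, 10.1, 9.7, 10.1]

bias_p, spread_p = bias_and_spread(precise_not_accurate, true_mass)
bias_a, spread_a = bias_and_spread(accurate_not_precise, true_mass)
print(f"precise, not accurate: bias {bias_p:+.3f} g, spread {spread_p:.4f} g")
print(f"accurate, not precise: bias {bias_a:+.3f} g, spread {spread_a:.4f} g")

# Calibration removes the bias but leaves the spread untouched
fixed = calibrate(precise_not_accurate, bias_p)
bias_f, spread_f = bias_and_spread(fixed, true_mass)
print(f"after calibration:     bias {bias_f:+.3f} g, spread {spread_f:.4f} g")
```

Subtracting a constant fixes the first data set entirely, while no constant correction can tighten the second one.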

Digital Instruments

Inexpensive micro-controllers (little programmable computers) like the Arduino read analog signals and convert them to digital, which is the way computers store their data. Here we will take the conversion to have 12-bit precision (the exact resolution varies by board; the popular Arduino Uno, for instance, is 10-bit). A bit is simply a single register that stores a binary digit, one or zero. It is called binary since the result is one of two (bi) options.

For a 12-bit measurement, it means that over the full range of values measured by the micro-controller, there are $2^b$ different values it could register, where $b$ is the number of bits. In the case of the Arduino where $b = 12$, that makes 4096 values. The smallest signal registers as a 0, and the largest as 4095 (one less so that with zero there are 4096 values). These values must then be scaled to represent physical quantities like temperature or voltage or light intensity.
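As a rough sketch of that scaling step, the snippet below counts the available levels and converts a raw count to a voltage. The helper names are hypothetical. The 5 V full range is an assumption here, as is the use of a step size equal to the full range divided by the number of levels.

```python
def adc_levels(bits):
    """Number of distinct values a converter with `bits` bits can register."""
    return 2 ** bits


def count_to_voltage(count, bits=12, v_max=5.0):
    """Scale a raw count to volts, assuming a step size of v_max / 2**bits."""
    levels = adc_levels(bits)
    if not 0 <= count < levels:
        raise ValueError(f"count must be between 0 and {levels - 1}")
    return count * v_max / levels


print(adc_levels(12))              # 4096
print(count_to_voltage(0))         # 0.0
print(count_to_voltage(2048))      # 2.5
print(count_to_voltage(4095))      # largest count, just under 5 V
```

The same function would serve for temperature or light intensity by swapping in a different scale factor and offset.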

If we were using an Arduino to measure a voltage, the smallest non-zero voltage it could register is the maximum measurable voltage divided by 4096. The maximum can be modified with simple circuits, but without doing anything special it is 5 V (volts). This means the precision of the voltage measurement is 5 V / 4096 = 1.221 mV, assuming the 5 V maximum is exact and doesn't affect the precision. This precision is directly related to the error inherent in the device. For this reason it is referred to as the quantization error, or the error associated with the smallest quantity measurable by the device. We know that the true value of the measurement will be rounded to the nearest binary value. Thus the maximum error or absolute error $e$ is 1.221 mV / 2 ≈ 0.610 mV, or half the quantization error.

It should be noted that the standard algorithms in place for ADC (analog-to-digital conversion) tend to round down rather than to the nearest value. This has subtle consequences that affect the absolute error in a way that depends on the magnitude of the reading. Depending on the details of the code running on the unit, the absolute error may, in the worst cases, be twice the half-step value given above. That would put us right back to an error equal to the quantization error itself. We will not discuss this in any more detail, but if you go into electrical engineering you certainly will someday.
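To see the difference between the two rounding behaviors, the sketch below sweeps many input voltages and records the worst error for each. It models "rounding down" as a plain floor of the voltage divided by the step size. That is a simplifying assumption for illustration, not a description of how any particular converter or library works.

```python
import math


def quantize(voltage, step, mode="nearest"):
    """Snap a voltage onto a grid of spacing `step` and return the snapped voltage."""
    if mode == "nearest":
        count = math.floor(voltage / step + 0.5)
    elif mode == "down":
        count = math.floor(voltage / step)
    else:
        raise ValueError("mode must be 'nearest' or 'down'")
    return count * step


def worst_case_error(step, mode, v_max=0.1, samples=100_000):
    """Largest |true - reported| found over evenly spaced test voltages in [0, v_max)."""
    worst = 0.0
    for i in range(samples):
        v = v_max * i / samples
        worst = max(worst, abs(v - quantize(v, step, mode)))
    return worst


step = 5.0 / 4096  # quantization error in volts for the 12-bit, 5 V case
nearest_worst = worst_case_error(step, "nearest")
down_worst = worst_case_error(step, "down")

print(f"step size:          {step * 1000:.3f} mV")
print(f"worst, nearest:     {nearest_worst * 1000:.3f} mV")
print(f"worst, round down:  {down_worst * 1000:.3f} mV")
```

Rounding to the nearest level keeps the worst error near half a step, while rounding down lets it approach a full step.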

The range of possible values an instrument can report is referred to as the FSR, or Full Scale Range. In this case the maximum voltage is 5 V and the minimum is zero, so $V_{FSR} = 5$ V. Some instruments will measure negative values as well. If a thermometer measures up to 200 F (Fahrenheit) and down to -50 F, the FSR is 250 F. We can write the error as $e = \frac{V_{FSR}/2^b}{2} = \frac{V_{FSR}}{2^{b+1}}$, or one half of the quantization error. Keep in mind that this ignores the rounding algorithm just mentioned above.
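The formula is short enough to check directly. In the sketch below the function names are hypothetical, and the thermometer is assumed to have a 12-bit digital readout purely so there is a number to plug in; the text does not say how many bits it has.

```python
def quantization_step(fsr, bits):
    """Smallest increment an instrument with the given full scale range can register."""
    return fsr / 2 ** bits


def max_error(fsr, bits):
    """Half the quantization step: e = FSR / 2**(bits + 1)."""
    return fsr / 2 ** (bits + 1)


# 12-bit converter with a 0 V to 5 V range
arduino_step_mV = quantization_step(5.0, 12) * 1000
arduino_e_mV = max_error(5.0, 12) * 1000
print(f"voltage: step {arduino_step_mV:.3f} mV, max error {arduino_e_mV:.3f} mV")

# Thermometer spanning -50 F to 200 F, ASSUMED to be 12-bit for this example
thermo_fsr = 200 - (-50)
thermo_e = max_error(thermo_fsr, 12)
print(f"thermometer: FSR {thermo_fsr} F, max error {thermo_e:.4f} F")
```

Note that the full scale range is the span between the extremes, so the negative lower limit adds to the range rather than subtracting from it.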

If we record values of voltage measured by this Arduino micro-controller we would need to write the values as, for instance , where we use half of the error e. Notice how unfortunately the quantity called voltage and the unit of voltage (the volt) are attributed the same symbol V. Notice also that I did not keep all the digits on the error term, but matched them to the measurement value's precision. In actual practice, the number of digits that I can justify keeping is really related to the precision of my VFSR. Here it is assumed to be 5.0000 V - not likely in practice with an Arduino.

Quantization Error and Common Instruments

The notion of quantization error relates to common measurement instruments as well as to digital ones. Consider a ruler with rulings for millimeters on it. In this case the millimeter plays the role of the quantization error, and we are to consider the maximum error e to be half this quantization error, or e=0.5mm.

If you have a digital scale at home that reads to the nearest pound, what do you suppose the quantization error and associated maximum error should be? The quantization error is 1 lb, and the maximum error e is half a pound. But that's only if it doesn't employ a rounding down algorithm. If it does, you might weigh 159.99 and it'll report 159 instead of 160. I think getting a scale with a tenths readout is a better idea so that whether rounding down or not, it isn't a big deal.
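The bathroom scale case can be tried out with Python's `decimal` module, which avoids binary floating-point surprises with values like 159.99. The `display` helper is invented for this sketch, and whether a real scale rounds down or to the nearest value is left as an assumption.

```python
from decimal import Decimal, ROUND_FLOOR, ROUND_HALF_UP


def display(weight, resolution, rounding):
    """Value shown by a scale with the given readout resolution and rounding rule."""
    w = Decimal(weight)
    r = Decimal(resolution)
    return (w / r).to_integral_value(rounding=rounding) * r


true_weight = "159.99"

for resolution in ("1", "0.1"):
    down = display(true_weight, resolution, ROUND_FLOOR)
    nearest = display(true_weight, resolution, ROUND_HALF_UP)
    print(f"resolution {resolution} lb: rounds down to {down}, "
          f"to nearest {nearest}, "
          f"round-down error {Decimal(true_weight) - down} lb")
```

With the whole-pound readout, rounding down costs almost a full pound, while with the tenths readout the same rule costs less than a tenth.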